On Sunday night, Oct 28th, we learned that most US equities markets would be closed the next day due to Hurricane Sandy. This is very unusual: since the four days following 9/11, the only market closures have been the National Days of Mourning following the deaths of Presidents Reagan and Ford, and those were announced with several business days’ lead time. The last time the markets were closed due to weather was all the way back in 1985. More to the point, this was the first unplanned market closure since we’d been trading.

Now, if you were to ask a software engineer to design a system today to handle the timing of market holidays or unplanned close days, you’d probably eventually end up with something like a “market hours” service. It would predict when the market will close, report whether the market is expected to open today, and keep some state synchronized in Zookeeper so that the entire system always has a consistent view of whether the market is open and can block waiting for the market to open.

If you actually ask that same software engineer to knock something out that will get the job done 99% of the time, you’ll end up with something like how we do it at Wealthfront — a single global Market object with a hard-coded list of holidays. (Never fear, DI fans: it’s injected so that we can replace it with a market that’s open or closed for testing purposes.)
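To make the idea concrete, here is a minimal sketch of what such an object could look like. It is written in Python purely for illustration and is not our actual code: the names `Market`, `FixedMarket`, `Trader`, and `HOLIDAYS` are hypothetical, and the holiday list is deliberately partial.

```python
from datetime import date

# Hypothetical, partial hard-coded list of market closures (illustration only).
HOLIDAYS = frozenset({
    date(2012, 9, 3),    # Labor Day
    date(2012, 10, 29),  # unplanned closure for Hurricane Sandy
    date(2012, 11, 22),  # Thanksgiving
    date(2012, 12, 25),  # Christmas
})


class Market:
    """Answers "is the market open on this day?" from a hard-coded list."""

    def __init__(self, holidays=HOLIDAYS):
        self._holidays = holidays

    def is_open_on(self, day):
        # weekday() returns 0-4 for Monday through Friday.
        return day.weekday() < 5 and day not in self._holidays


class FixedMarket:
    """Test double: a market that is always open or always closed."""

    def __init__(self, is_open):
        self._is_open = is_open

    def is_open_on(self, day):
        return self._is_open


class Trader:
    """Hypothetical consumer; the market is injected through the constructor."""

    def __init__(self, market):
        self._market = market

    def should_trade(self, day):
        return self._market.is_open_on(day)
```

In production code a `Trader` would receive a `Market()`; in a test it can be handed `FixedMarket(True)` or `FixedMarket(False)` so the result doesn't depend on the calendar.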

We also have a test to ensure we update it yearly.

This has served us well for over four years. As a small startup, we take the principle of “you ain’t gonna need it” (YAGNI) to heart. Now we have a natural disaster coming after us in just the place where we took that principle to the extreme. Fortunately, one area where we could be accused of over-engineering is in our test and continuous deployment infrastructure.

The change is trivial: add a line to holidays. Our tests aren’t really going to be aware of the change. Even though it’s embedded in code, it’s really a data change. The tests do ensure that I didn’t introduce a typo when making the change or accidentally commit unrelated broken code. They also ensure that the build was passing before I made my change. If we didn’t have a trunk-stable policy and someone had left the build broken on Friday, I wouldn’t have been able to quickly make this change on Sunday night.

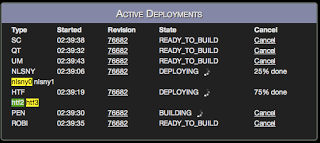

Now that I have a working build, I need to deploy it to all the machines that care about whether the market is open. This is a pretty long list, including our price feed, our actual trading system, and the SuperCruncher that calculates account performance for the dashboards. Since our deployments are completely automated, including rollback if something is degraded, I don’t need a war room full of sys admins to make the change.
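For readers who haven't seen automated rollback, the core loop can be sketched in a few lines. This is a simplified illustration under stated assumptions, not our deployment system: `rolling_deploy`, `fake_deploy`, and the in-memory `versions` table are hypothetical, and a real health check would look at far more than a boolean.

```python
def rolling_deploy(hosts, new_version, deploy, is_healthy):
    """Deploy host by host; if any host looks degraded, restore every host touched.

    `deploy(host, version)` must install the version and return the previous one.
    Returns True if the rollout completed, False if it was rolled back.
    """
    previous = {}  # host -> version it was running before this rollout
    for host in hosts:
        previous[host] = deploy(host, new_version)
        if not is_healthy(host):
            for touched, old_version in previous.items():
                deploy(touched, old_version)
            return False
    return True


# In-memory stand-in for real machines (hypothetical service names and versions).
versions = {"price-feed": "v1", "trading": "v1", "supercruncher": "v1"}


def fake_deploy(host, version):
    old_version = versions[host]
    versions[host] = version
    return old_version


completed = rolling_deploy(list(versions), "v2", fake_deploy, lambda host: True)
```

Because the rollback path is exercised by the same automation as the deploy path, pushing a one-line change on a Sunday night doesn't require anyone to watch each machine by hand.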

“YAGNI” is a good principle, but only if you’re flexible enough to handle changes on the right time scale when you do need them. This same flexibility served us well again on Oct 31, when we needed to switch to our backup price feed after our servers in New York started having problems; the datacenter was running on generators for a week while the basement was flooded. Continuous deployment and automated testing don’t sound like solutions to disaster planning, but what they give you is the ability to make unplanned changes in reaction to unforeseen circumstances.