Recently, we found visual regressions in our app only after we had shipped it to production on the Play Store, which is one of the last places you want to catch a bug. We wanted to detect and fix these bugs much earlier in the development process, and there are a couple of ways to go about this. One option is a manual QA session before every release, but that does not scale: we invest heavily in continuous deployment and release our app every week, so it would mean spending many QA hours each week. We are also huge believers in TDD and automated tests, so going through the app manually was a non-starter. The other option is to build a suite of automated tests that runs every time we build master. This catches issues automatically, without manual effort, and scales with any number of releases. So we invested in building infrastructure that catches this class of issues early and in an automated fashion.

Detecting a visual regression

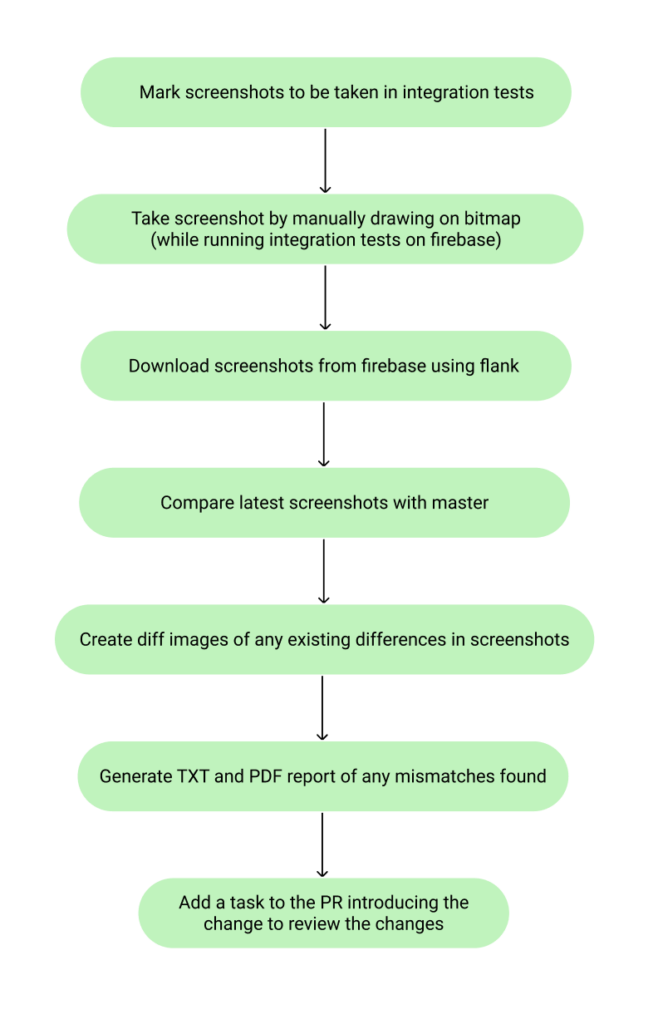

The basic idea behind detecting a visual regression is to do a pixel-by-pixel comparison between screenshots of the same view in two different versions of the app. This lets us automatically flag any change in the app's appearance for review much earlier in the development process. The sections below walk through the flow that the visual regression infrastructure follows.

Taking the Screenshot

The first and most important part of visual regression testing is capturing the screenshots that we want to compare. We looked at many open source solutions, but none of them fit our constraints, and some were non-deterministic, which led to a lot of flakiness when taking screenshots. To address those issues, we built our own screenshotting framework called ScreenCaptor. We built a number of components into ScreenCaptor to make screenshots more deterministic and repeatable, so that we can compare them and detect regressions.

With ScreenCaptor, you can take a screenshot like so

We have now open-sourced this library and you can find it here. The library makes capturing screenshots more deterministic with a few techniques:

Manually drawing on a Canvas

Instead of using AndroidX's screenshot implementation, which relies on UiAutomator to take the screenshot, we manually draw the screen's content onto a bitmap-backed canvas. This is a much more direct approach, since the views render themselves straight into the bitmap that we save.

Handling Dynamic data within views

A common case we run into is a view that displays dynamic data, which might change with every run of the test. The classic example is a date displayed on the screen. For this, we can hide such views while we take the screenshot using the ViewVisibilityModifier. It sets the views to INVISIBLE right before the screenshot is taken and restores them to their initial state afterwards.
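To make the hide-and-restore idea concrete, here is a minimal sketch in plain Java. It is not ScreenCaptor's actual code: `FakeView` is a hypothetical stand-in for `android.view.View` that models only visibility, and `VisibilityGuard` and `captureWithHidden` are names invented for this illustration. The point it tries to show is that the original visibility is restored even if the capture step throws.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for android.view.View; only visibility is modelled. */
class FakeView {
    // Same integer values as the android.view.View constants.
    static final int VISIBLE = 0;
    static final int INVISIBLE = 4;

    private int visibility = VISIBLE;

    int getVisibility() { return visibility; }

    void setVisibility(int visibility) { this.visibility = visibility; }
}

/** Illustrative helper (an assumption, not the library's API). */
final class VisibilityGuard {
    /** Hides the given views, runs the capture, then restores each view's prior visibility. */
    static void captureWithHidden(List<FakeView> dynamicViews, Runnable capture) {
        // Remember what each view looked like before we touched it.
        List<Integer> previous = new ArrayList<>();
        for (FakeView view : dynamicViews) {
            previous.add(view.getVisibility());
            view.setVisibility(FakeView.INVISIBLE);
        }
        try {
            capture.run();
        } finally {
            // Restore even when the capture fails, so later tests see the original state.
            for (int i = 0; i < dynamicViews.size(); i++) {
                dynamicViews.get(i).setVisibility(previous.get(i));
            }
        }
    }
}
```

INVISIBLE (rather than GONE) keeps the hidden view's space in the layout, so the rest of the screen does not shift between runs.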

Disabling cursor and scrollbars

Cursors and scrollbars show up inconsistently in EditTexts and ScrollViews, and leaving them enabled can make our screenshots flaky. To address this, we have the ViewTreeMutator, which walks the view hierarchy and disables scrollbars and cursors wherever applicable.
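The tree walk described above can be sketched roughly as follows. This is an illustration under assumptions, not the ViewTreeMutator source: `FakeNode` is a hypothetical stand-in for a node in the Android view hierarchy, and its boolean fields model what `View#setVerticalScrollBarEnabled`, `View#setHorizontalScrollBarEnabled` and `TextView#setCursorVisible` would toggle on a real device.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for a node in the Android view hierarchy. */
class FakeNode {
    final List<FakeNode> children = new ArrayList<>();
    final boolean isTextInput;         // true for nodes playing the role of an EditText
    boolean scrollbarsEnabled = true;  // models the vertical/horizontal scrollbar flags
    boolean cursorVisible = true;      // models the text cursor flag

    FakeNode(boolean isTextInput) { this.isTextInput = isTextInput; }

    /** Adds a child and returns this node so trees can be built fluently. */
    FakeNode add(FakeNode child) {
        children.add(child);
        return this;
    }
}

/** Illustrative walker (an assumption, not the library's API). */
final class TreeMutator {
    /**
     * Depth-first walk that disables scrollbars on every node and the cursor
     * on text inputs. Returns the number of nodes visited.
     */
    static int disableFlakySources(FakeNode root) {
        int visited = 1;
        root.scrollbarsEnabled = false;
        if (root.isTextInput) {
            root.cursorVisible = false;
        }
        for (FakeNode child : root.children) {
            visited += disableFlakySources(child);
        }
        return visited;
    }
}
```

Walking the whole tree from the root means newly added screens get the same treatment without any per-test setup.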

These techniques keep our screenshot framework as free of flakiness as possible, and they are easy to extend.

Collecting the screenshots

Since we use Firebase to run our integration tests, we need a way to download the screenshots from the Firebase devices into our local build directory so that we can do the pixel comparison. This is where Flank comes in handy: it lets us specify the paths of the files to be pulled from the emulators and downloaded from Firebase.

Pixel comparison

Once the screenshots are downloaded, comparing them is pretty straightforward. We used an open source solution for the pixel comparison. It tells us whether the two screenshots match pixel for pixel, and if there is any difference, it automatically highlights the differing pixels in a new, annotated image. Here's an example of what a difference might look like:
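For intuition about the comparison step itself, here is a minimal sketch in plain Java using `java.awt.image.BufferedImage`. It is not the open source tool we used; `PixelDiff`, `PixelDiffResult` and the magenta highlight color are all assumptions made for this illustration. It does an exact per-pixel ARGB comparison and paints every differing pixel in the highlight color.

```java
import java.awt.image.BufferedImage;

/** Outcome of comparing two screenshots (illustrative type). */
final class PixelDiffResult {
    final boolean matches;
    final int differingPixels;      // -1 when the dimensions differ
    final BufferedImage annotated;  // null unless same-sized images differ

    PixelDiffResult(boolean matches, int differingPixels, BufferedImage annotated) {
        this.matches = matches;
        this.differingPixels = differingPixels;
        this.annotated = annotated;
    }
}

/** Illustrative comparator (an assumption, not a real library's API). */
final class PixelDiff {
    private static final int HIGHLIGHT_ARGB = 0xFFFF00FF; // opaque magenta

    static PixelDiffResult compare(BufferedImage baseline, BufferedImage candidate) {
        int width = baseline.getWidth();
        int height = baseline.getHeight();
        if (width != candidate.getWidth() || height != candidate.getHeight()) {
            // Different sizes can never match pixel for pixel.
            return new PixelDiffResult(false, -1, null);
        }
        BufferedImage annotated = new BufferedImage(width, height, BufferedImage.TYPE_INT_ARGB);
        int differing = 0;
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int expected = baseline.getRGB(x, y);
                int actual = candidate.getRGB(x, y);
                if (expected == actual) {
                    annotated.setRGB(x, y, actual);          // copy unchanged pixels
                } else {
                    annotated.setRGB(x, y, HIGHLIGHT_ARGB);  // mark the difference
                    differing++;
                }
            }
        }
        boolean matches = differing == 0;
        return new PixelDiffResult(matches, differing, matches ? null : annotated);
    }
}
```

An exact comparison like this only works because the screenshots are deterministic in the first place; a tool with a tolerance threshold would be the usual alternative when they are not.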

Regression reporting

With all the pieces of the puzzle in place, the last part was to put them together and generate a report, which is emailed to the team and archived on Jenkins for future viewing. We decided we wanted two variants of the report. The first is a text table listing the name of every screenshot and whether it was a match or a mismatch. This table is always generated, whether or not a mismatch is found.
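A text table of this kind could be produced along the following lines. This is a sketch under assumptions: `TextReport`, its column layout and the MATCH/MISMATCH labels are invented for illustration and are not our actual report format.

```java
import java.util.Map;

/** Illustrative report builder (an assumption, not the real reporter). */
final class TextReport {
    private static final String HEADER = "Screenshot";

    /** Renders one row per screenshot, with names padded to a common width. */
    static String render(Map<String, Boolean> results) {
        int nameWidth = HEADER.length();
        for (String name : results.keySet()) {
            nameWidth = Math.max(nameWidth, name.length());
        }
        // Left-align the name column; "\n" keeps the output identical on every OS.
        String rowFormat = "%-" + nameWidth + "s | %s\n";
        StringBuilder table = new StringBuilder();
        table.append(String.format(rowFormat, HEADER, "Result"));
        for (Map.Entry<String, Boolean> entry : results.entrySet()) {
            String verdict = entry.getValue() ? "MATCH" : "MISMATCH";
            table.append(String.format(rowFormat, entry.getKey(), verdict));
        }
        return table.toString();
    }

    /** True when at least one screenshot did not match its baseline. */
    static boolean hasMismatch(Map<String, Boolean> results) {
        return results.containsValue(Boolean.FALSE);
    }
}
```

Passing a `LinkedHashMap` keeps the rows in the order the screenshots were compared, and a check like `hasMismatch` is the natural place to decide whether any further reporting is needed.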

We also wanted an optional PDF report that is generated whenever there is a mismatch in the screenshots. This PDF contains details about the versions compared along with all the mismatched screenshots. It is emailed to the team, and we also page the on-call engineer for any failures on our separate visual regression builds.

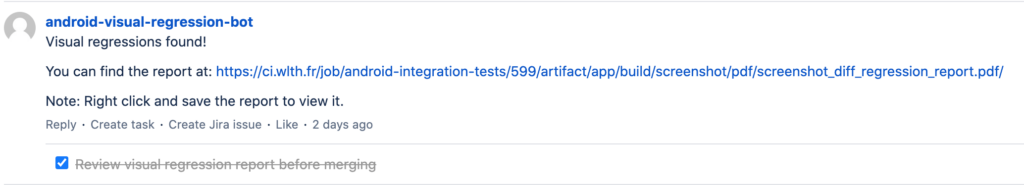

We also wanted to report visual bugs even earlier in the development process, before the code gets merged into master. For this, we started running a lighter version of the visual regression builds on our side-branch builds and reporting the visual regressions through our custom Stash reporter. This reporter uses the Stash REST API to add a task, with the visual regression report linked (as seen below), to our PR, so that we make sure to review the report before merging the PR.

Conclusion

This infrastructure gives us confidence that any visual change we make to the app will be reviewed before we ship to production. Automated testing for visual bugs lets us make global changes to the app, such as changing a reusable component or a shared color, with confidence. Overall, this allows us to move fast without breaking things.

Disclosure

This communication has been prepared solely for informational purposes only. Nothing in this communication should be construed as an offer, recommendation, or solicitation to buy or sell any security or a financial product. Any links provided to other server sites are offered as a matter of convenience and are not intended to imply that Wealthfront or its affiliates endorses, sponsors, promotes and/or is affiliated with the owners of or participants in those sites, or endorses any information contained on those sites, unless expressly stated otherwise.

Wealthfront offers a free software-based financial advice engine that delivers automated financial planning tools to help users achieve better outcomes. Investment management and advisory services are provided by Wealthfront Advisers LLC, an SEC registered investment adviser, and brokerage related products are provided by Wealthfront Brokerage LLC, a member of FINRA/SIPC.

Wealthfront, Wealthfront Advisers and Wealthfront Brokerage are wholly owned subsidiaries of Wealthfront Corporation.

© 2020 Wealthfront Corporation. All rights reserved.