At Wealthfront, the A/B test is one of our most powerful decision-making tools. It helps us make decisions under uncertainty and learn how our clients react to the new features we build. Our own framework is a particularly full-featured one, supporting Bayesian inference methods, continuous stopping criteria, check metrics, and multiple variants.

Recently, however, we found that some of our A/B tests were taking weeks, or even months, to resolve. This slowed down our ability to learn from our data and to decide what the next version of a feature should look like. In Part 1 of this blog post, I’ll talk about why this problem arises when building a highly featured A/B testing infrastructure, and in Part 2 I’ll talk about how we deal with it at Wealthfront.

What is Robustness?

To frame this discussion, I want to define a relevant engineering principle: robustness. In a general sense, robustness is the ability of a system to perform well when faced with a great variety of inputs. For example, consider two functions that each return the average of two integers: mean1, which computes (a + b) / 2 directly, and mean2, which computes the same average without ever forming the full sum a + b.

mean1 is less robust than mean2 because it is prone to overflow whenever a + b exceeds the maximum integer. In practice, mean1 returns the correct average for most practical inputs, but because it cannot handle as wide a variety of inputs, it is not as robust as mean2.
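A minimal sketch of mean1 and mean2 in Python follows. Python integers never overflow, so the sketch emulates Java-style 32-bit `int` arithmetic with a hypothetical helper, `wrap_int32`. The overflow-safe body of `mean2` is one common formulation chosen for illustration, not necessarily the implementation the comparison originally used, and both functions round down.

```python
INT_MAX = 2**31 - 1


def wrap_int32(x: int) -> int:
    """Reduce x to the signed 32-bit range, mimicking Java int overflow."""
    x &= 0xFFFFFFFF
    return x - (1 << 32) if x >= (1 << 31) else x


def mean1(a: int, b: int) -> int:
    # Forms the full sum first, so a + b can exceed INT_MAX and wrap around.
    # Rounds down (floor); Java's `/` truncates toward zero instead, which
    # only differs when the sum is negative and odd.
    return wrap_int32(a + b) // 2


def mean2(a: int, b: int) -> int:
    # Halves each operand before adding, so every intermediate stays inside
    # the 32-bit range; the last term restores the 1 lost when both are odd.
    return (a >> 1) + (b >> 1) + (a & b & 1)


print(mean1(3, 5), mean2(3, 5))              # 4 4
print(mean1(INT_MAX, 1), mean2(INT_MAX, 1))  # -1073741824 1073741824
```

Both functions agree on everyday inputs; only near the edge of the integer range does mean1 silently return a wildly wrong answer.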

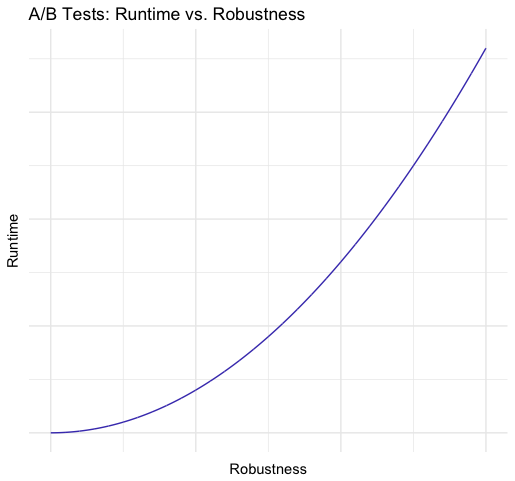

So, all other things being equal, robustness is a property we like to see in our code. Unfortunately, all other things are never equal. For one, robust code usually requires more engineering effort, sometimes to handle cases that occur less than 1% of the time. In other cases, it imposes additional memory or computational requirements on the system. But the demands of a robust A/B testing framework are especially interesting and unique, since you pay for robustness with data.

Multiple Tests: The Robustness vs. Data Tradeoff

Let’s take one specific example. Suppose you are using statistical power methods to determine how many samples you need to collect before deciding, at 95% significance, whether your variant outperforms your control. And you ask yourself: shouldn’t we be able to decide early if the data we’ve collected shows that the variant outperforms the control by a wide margin?

So you propose a more robust system that runs two statistical tests instead of one: a 95% significance test after you’ve collected half of the planned samples, and another 95% significance test after you’ve collected all of them. If the first test doesn’t choose a winner, we just keep collecting samples. If it does, the experiment is already done, and we’ve saved ourselves a bunch of time! Seems great, right?
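To see what this two-look procedure does when the variant is actually no better than the control, here is a Monte Carlo sketch. It assumes a large-sample setting where each look is a two-sided z-test on normally distributed, standardized data arriving in equal-size batches; the function name and parameters are illustrative, not part of any Wealthfront API.

```python
import math
import random
from statistics import NormalDist


def false_positive_rate(looks: int, alpha: float, trials: int = 200_000,
                        seed: int = 0) -> float:
    """Estimate how often `looks` equally spaced two-sided z-tests declare a
    winner when variant and control are actually identical."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    hits = 0
    for _ in range(trials):
        total = 0.0
        for j in range(1, looks + 1):
            # Under the null, each batch adds an independent N(0, 1) increment
            # to the running sum of the standardized difference.
            total += rng.gauss(0.0, 1.0)
            if abs(total / math.sqrt(j)) > z_crit:
                hits += 1  # declared a (false) winner at look j and stopped
                break
    return hits / trials


print(false_positive_rate(looks=1, alpha=0.05))  # close to 0.05
print(false_positive_rate(looks=2, alpha=0.05))  # noticeably higher, about 0.08
```

Raising `looks` mimics checking the result more often, and the false-positive rate keeps climbing as looks are added.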

Unfortunately, since every test introduces its own chance of a false positive or false negative, we’ve introduced more sources of error. In particular, the variant now gets two chances to be declared the winner by luck alone, so the effective significance of the two tests put together is lower than the significance of either test individually. We can adjust for this by requiring a higher significance for each test. So you just bump up the required significance in your sample size calculator, and…

The test now requires more data. We’ve gained robustness, but for a price.
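How much more data? A rough sketch uses the standard normal-approximation sample size formula for a two-sided, two-proportion z-test. The inputs are hypothetical (10% baseline conversion, 11% target, 80% power, 500 visitors per arm per day), and splitting α evenly across the two looks is a Bonferroni correction, the simplest and most conservative adjustment, used here as a stand-in for whatever a real calculator would apply.

```python
import math
from statistics import NormalDist


def samples_per_arm(p_control: float, p_variant: float,
                    alpha: float, power: float = 0.8) -> int:
    """Approximate samples per arm for a two-sided, two-proportion z-test."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    return math.ceil((z_alpha + z_power) ** 2 * variance
                     / (p_control - p_variant) ** 2)


# Hypothetical experiment: 10% baseline conversion, hoping to detect 11%.
single = samples_per_arm(0.10, 0.11, alpha=0.05)
# Bonferroni split across two looks: each look runs at alpha = 0.025.
two_looks = samples_per_arm(0.10, 0.11, alpha=0.05 / 2)

daily_visitors_per_arm = 500  # hypothetical traffic level
for label, n in [("one test", single), ("two looks", two_looks)]:
    print(f"{label}: {n} per arm, ~{math.ceil(n / daily_visitors_per_arm)} days")
```

With these made-up inputs, the stricter per-look threshold raises the maximum sample size by roughly 20%, which at fixed traffic translates directly into extra days of waiting. Group-sequential boundaries such as Pocock's or O'Brien-Fleming's spend α more efficiently than Bonferroni, but they still require more data than a single test.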

Usefulness of Robust A/B Testing

So multiple tests aren’t something we can get for free; we pay a cost in the form of additional data, which for a startup means we’re paying in time, sometimes on the scale of weeks or months. These are weeks or months that engineers, product managers, designers, and other members of our team could have spent building something new based on what we learned from the experiment.

Even so, other forms of robustness can be quite useful when running various types of A/B tests. The assumptions made by a standard A/B test are very strict, and our tests rarely conform to all of them. Remember that standard A/B tests assume that:

- Our metrics have an underlying mean that does not vary with time

- We are only tracking one metric

- We only have one control and one variant

In reality, it is very rare that all of these are true. Metrics almost always vary with time, and sometimes significantly. One metric rarely captures everything we want to learn from an experiment. We often want to test out multiple versions of a feature to see which one resonates the most with clients. All these needs demand greater robustness in our A/B testing framework.

The Data Cost

But what happens to our data requirements if we make our system robust enough to handle these situations? Here’s the impact of a few features that we’ve used at Wealthfront:

- Continuous stopping criteria, which use the confidence of our test result to decide whether to stop the test or continue, effectively run multiple tests, and they increase error rates unless we raise the significance required of each one.

- Additional metrics introduce more sources of error, just as multiple tests do, and therefore require each individual test to reach a higher significance to achieve the same overall significance.

- Multiple variants demand that each variant be compared to the control as well as to the other variants. This all-against-all comparison requires $\binom{n+1}{2} = \frac{n(n+1)}{2}$ tests, where $n$ is the number of variants, so the number of tests grows quadratically with $n$ (see figure below). Every extra test tightens the significance each individual test must reach, and every extra variant needs its own samples, so the amount of data we need to collect climbs quickly.

Figure: Number of tests (edges) vs. number of variants (nodes)
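The counting behind the all-against-all comparison, and its effect on error rates, can be sketched in a few lines. The family-wise error formula treats the tests as independent, which pairwise comparisons sharing a control are not exactly, so it is only an illustration; the Bonferroni per-test threshold (α divided by the number of tests) holds regardless. The same arithmetic applies to tracking several metrics. Function names here are our own.

```python
from math import comb


def pairwise_tests(num_variants: int) -> int:
    """All-against-all comparisons among a control plus `num_variants` variants."""
    return comb(num_variants + 1, 2)


def familywise_error(alpha: float, num_tests: int) -> float:
    """Chance of at least one false positive, if the tests were independent."""
    return 1 - (1 - alpha) ** num_tests


for n in range(1, 5):
    m = pairwise_tests(n)
    print(f"{n} variant(s): {m} tests, "
          f"uncorrected family-wise error ~{familywise_error(0.05, m):.2f}, "
          f"Bonferroni per-test alpha {0.05 / m:.4f}")
```

Going from one variant to four takes us from 1 test to 10, and without correction the chance of at least one spurious winner rises from 5% to about 40%.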

Every feature we add increases our data requirements. Generally, the looser the constraints on our A/B test, the more uncertainty we introduce, and the more data we need to collect to be as certain as we want to be about our test results.

Impact on A/B Framework Design

In an ideal world, our A/B testing frameworks would be very robust. But if your organization doesn’t have the data to back it up, robustness can significantly lengthen the time it takes for an experiment to resolve. Practically, this means that A/B testing strategies that work at data giants, where experiments can resolve in minutes, might not work at a smaller startup with low data flow. How do we deal with this at Wealthfront? Find out in the upcoming Part 2 of this blog post!